2D Object Tracking - Experiments

In this blog post, I’ll be sharing my journey of exploring 2D object tracking, a vital area in computer vision. I’ll delve into its definition, evaluation metrics, state-of-the-art techniques, and practical experiments. Each section will provide insights into different aspects of 2D object tracking, along with the tools and methodologies I’ve learned along the way.

Definition of 2D object tracking

2D object tracking is a fundamental task in computer vision that involves locating and following objects of interest across consecutive frames in a video sequence. Unlike object detection, which focuses on identifying objects within individual frames, object tracking aims to maintain the identity of objects over time as they move within the scene. This post focuses on 2D multi-object tracking (MOT), where several objects are tracked simultaneously in the image plane. Tracking is particularly crucial in applications such as surveillance, autonomous driving, and sports analysis.

Key Components of 2D Object Tracking:

Detection: The process begins with detecting objects of interest in the initial frame of the video. This can be achieved using various object detection algorithms such as YOLO, Faster R-CNN, or SSD.

Initialization: Once objects are detected, they are assigned unique identifiers to track them across frames. This initialization step establishes the correspondence between objects in the first frame and their representations in subsequent frames.

Localization: The main task of object tracking is to estimate the position and size of each object in every frame of the video. This involves predicting the object’s location based on its previous state and motion model, as well as incorporating information from the current frame.

Data Association: As the video progresses, objects may change appearance, occlude each other, or temporarily disappear from view. Data association links new detections to existing tracks so that identities are maintained reliably. Common tools include the Hungarian algorithm, which solves the assignment between tracks and detections (often using positions predicted by a Kalman filter), as well as deep learning-based appearance matching.

Tracking Quality Assessment: Finally, the performance of the tracking system is evaluated using various metrics to ensure accurate and robust object tracking. These metrics assess factors such as tracking accuracy, robustness to occlusions and clutter, and computational efficiency.

Challenges of multi-object tracking

Tracking multiple objects in videos presents unique challenges that require specialized solutions. Here are some common scenarios:

Occlusion: Objects may be partially or fully obscured by other objects or the environment.

Identity Switches: When multiple objects cross paths, it can be challenging to assign identities to each object accurately.

Motion Blur: Fast-moving objects or camera motion can cause motion blur, making it challenging to visually identify objects.

Viewpoint Variation: Objects viewed from different angles may appear significantly different, posing a challenge for visual identification.

Scale Change: Objects may change in size over time, making it difficult to maintain accurate tracking.

Background Clutter: Similarities between object and background textures can confuse tracking algorithms, leading to tracking failures.

Illumination Variation: Changes in lighting conditions can affect the visual appearance of objects, making tracking challenging.

Low Resolution: Tracking performance may degrade in low-resolution videos, impacting the accuracy of object tracking.

Addressing these challenges is essential for building robust multi-object tracking systems. Because many of them (occlusion, motion blur, illumination changes) degrade what a tracker can see in a single frame, robust trackers also rely on motion models and on the history of each track rather than on visual cues alone.

Evaluation metrics

In evaluating the performance of 2D object tracking algorithms, it’s crucial to consider various error types that might arise. These errors provide insights into the accuracy and reliability of tracking systems. Here, we delve into the key evaluation metrics and the underlying concepts:

Error Types in MOT:

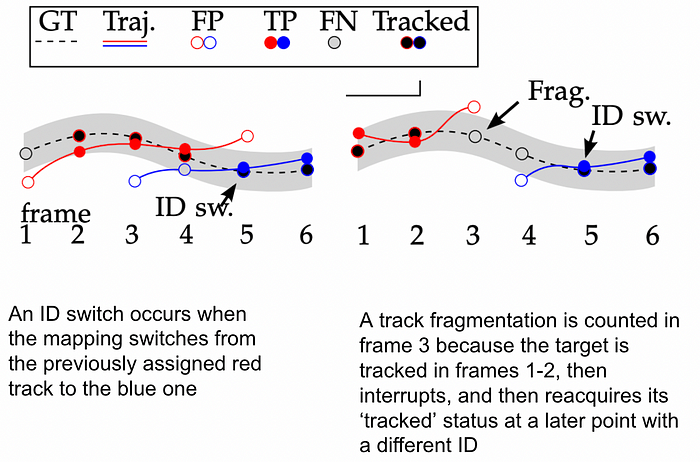

Multiple-object tracking (MOT) evaluation metrics address five main error types:

- False Negatives (FN): Instances where ground truth objects exist but are missed by the tracker.

- False Positives (FP): Occurrences where the tracker predicts objects that have no corresponding ground truth.

- Merge or ID Switch (IDSW): When two or more object tracks are erroneously swapped due to close proximity.

- Deviation: Instances where an object track is reinitialized with a different ID.

- Fragmentation: Occurs when a track abruptly stops being tracked despite the ground truth track still existing.

Figure 1: Types of error in MOT [1]

Bijective Matching and Association:

In MOT evaluation, matching is typically performed at the detection level, using a one-to-one (bijective) mapping between predicted and ground truth detections in each frame: each ground truth detection is matched to at most one predicted detection, and vice versa. A match requires sufficient spatial similarity, usually an Intersection over Union (IoU) score above a threshold (0.5 is common on MOTChallenge).
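
To make the matching step concrete, here is a minimal sketch of per-frame bijective matching, assuming boxes in (x1, y1, x2, y2) pixel format and an IoU threshold of 0.5. It uses SciPy’s `linear_sum_assignment` (an optimal assignment solver) to maximize the total IoU, then drops assigned pairs below the threshold. The function names are illustrative, and full CLEAR MOT implementations additionally prefer to keep the previous frame’s matches, which this sketch omits.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def iou_matrix(boxes_a: np.ndarray, boxes_b: np.ndarray) -> np.ndarray:
    """Pairwise IoU between (N, 4) and (M, 4) boxes in (x1, y1, x2, y2) format."""
    # Corners of the intersection rectangle, broadcast to shape (N, M).
    x1 = np.maximum(boxes_a[:, None, 0], boxes_b[None, :, 0])
    y1 = np.maximum(boxes_a[:, None, 1], boxes_b[None, :, 1])
    x2 = np.minimum(boxes_a[:, None, 2], boxes_b[None, :, 2])
    y2 = np.minimum(boxes_a[:, None, 3], boxes_b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (boxes_a[:, 2] - boxes_a[:, 0]) * (boxes_a[:, 3] - boxes_a[:, 1])
    area_b = (boxes_b[:, 2] - boxes_b[:, 0]) * (boxes_b[:, 3] - boxes_b[:, 1])
    union = area_a[:, None] + area_b[None, :] - inter
    return inter / np.maximum(union, 1e-9)


def bijective_match(gt: np.ndarray, pred: np.ndarray, threshold: float = 0.5):
    """One-to-one GT/prediction matching that maximizes total IoU in one frame."""
    if len(gt) == 0 or len(pred) == 0:
        return []
    iou = iou_matrix(gt, pred)
    # linear_sum_assignment minimizes cost, so negate IoU to maximize it.
    rows, cols = linear_sum_assignment(-iou)
    # Assigned pairs below the threshold do not count as matches.
    return [(int(r), int(c)) for r, c in zip(rows, cols) if iou[r, c] >= threshold]


gt = np.array([[0, 0, 10, 10], [20, 20, 30, 30]], dtype=float)
pred = np.array([[21, 21, 31, 31], [1, 1, 11, 11], [50, 50, 60, 60]], dtype=float)
print(bijective_match(gt, pred))  # [(0, 1), (1, 0)]
```

In this toy frame, the third prediction stays unmatched and would count as a false positive; any unmatched ground truth box would count as a false negative.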

Association, on the other hand, refers to the assignment of IDs to detections over time. An identity switch (IDSW) occurs when the predicted ID matched to a ground truth object changes between frames, for example when two tracks swap identities or when a lost track is reinitialized with a new ID.

Main MOT Metrics:

Multi-Object Tracking Accuracy (MOTA) [2]: MOTA summarizes overall tracking accuracy by combining false positives, false negatives, and ID switches, normalized by the number of ground truth objects. Because false positives and false negatives typically far outnumber ID switches, MOTA is weighted heavily toward detection quality rather than association quality. This metric is part of the CLEAR MOT metrics.
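
The MOTA definition from [2] reduces to one line of arithmetic: MOTA = 1 - (FN + FP + IDSW) / GT, with every count summed over all frames. The sketch below uses made-up counts for illustration (not results from these experiments) to show why the score is not bounded below by zero: nothing prevents the error total from exceeding the number of ground truth objects.

```python
def mota(num_fn: int, num_fp: int, num_idsw: int, num_gt: int) -> float:
    """MOTA = 1 - (FN + FP + IDSW) / GT, with all counts summed over every frame."""
    if num_gt <= 0:
        raise ValueError("MOTA is undefined without ground truth objects")
    return 1.0 - (num_fn + num_fp + num_idsw) / num_gt


# Illustrative counts only. Errors are not bounded by GT, so MOTA can go negative.
print(mota(num_fn=300, num_fp=200, num_idsw=20, num_gt=1000))  # about 0.48
print(mota(num_fn=600, num_fp=500, num_idsw=20, num_gt=1000))  # about -0.12
```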

Multi-Object Tracking Precision (MOTP) [2]: MOTP measures the localization accuracy of the tracker by averaging the overlap (IoU on MOTChallenge) between all matched predictions and their ground truth objects. Since it only considers matched pairs, it reflects how precisely objects are localized rather than how many are detected or correctly associated. This metric is part of the CLEAR MOT metrics.
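
A minimal sketch of MOTP as an overlap score, assuming the IoU of every matched pair is already known. One detail worth noting: all matches in the sequence are pooled before averaging, so this is not a mean of per-frame means (for the illustrative input below, averaging per-frame means would give 0.65 instead of 0.7).

```python
def motp(per_frame_ious: list[list[float]]) -> float:
    """MOTP as the mean IoU over every matched pair in a sequence.

    All matches are pooled before averaging, so frames with more
    matches weigh more than frames with few.
    """
    total = sum(sum(frame) for frame in per_frame_ious)
    count = sum(len(frame) for frame in per_frame_ious)
    return total / count if count else 0.0


# Illustrative IoUs for two frames: two matches, then one match.
print(motp([[0.9, 0.7], [0.5]]))  # about 0.7
```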

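Identity Metrics (IDF1) [3]: IDF1 focuses on association accuracy rather than detection. Instead of matching frame by frame, it computes a single bijective mapping between whole ground truth and predicted trajectories, then counts identity true positives (IDTP), identity false positives (IDFP), and identity false negatives (IDFN) under that mapping. IDF1 is the F1 score of the resulting identity precision and recall.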
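
IDF1 can be sketched from trajectory-level counts. Assuming we already know, for each ground truth trajectory i and predicted trajectory j, in how many frames they are spatially matched, the optimal trajectory mapping is the one-to-one assignment that maximizes IDTP; IDFN and IDFP are whatever that mapping leaves uncovered, and IDF1 = 2·IDTP / (2·IDTP + IDFP + IDFN). The input layout is an assumption for illustration, not TrackEval’s internal format.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def idf1(matches: np.ndarray, gt_lengths: np.ndarray, pred_lengths: np.ndarray) -> float:
    """IDF1 from trajectory-level overlap counts.

    matches[i, j]: frames in which GT trajectory i and predicted trajectory j
        are spatially matched (e.g. IoU >= 0.5).
    gt_lengths[i], pred_lengths[j]: number of boxes in each trajectory.
    """
    # A single one-to-one mapping of whole trajectories that maximizes IDTP.
    rows, cols = linear_sum_assignment(-matches)
    idtp = matches[rows, cols].sum()
    idfn = gt_lengths.sum() - idtp    # GT boxes not covered by their mapped ID
    idfp = pred_lengths.sum() - idtp  # predicted boxes outside the mapping
    return float(2 * idtp / (2 * idtp + idfp + idfn))


# Two 10-frame objects whose predicted IDs swap after 6 frames.
m = np.array([[6, 4], [4, 6]])
print(idf1(m, np.array([10, 10]), np.array([10, 10])))  # 0.6
```

Even though every box is detected in this toy case, the swap costs 40% of the identity score, which is exactly the kind of association error IDF1 is designed to expose.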

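Higher Order Tracking Accuracy (HOTA) [4]: HOTA provides a unified metric that evaluates detection, association, and localization accuracy. At each localization threshold, it is the geometric mean of a detection accuracy term (DetA) and an association accuracy term (AssA), and the final score averages over a range of thresholds, so localization quality (LocA) is reflected as well. This structure lets HOTA be decomposed into detection, association, and localization errors for a detailed assessment of tracker performance.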
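
Following the definitions in [4], the per-threshold score is HOTA_α = sqrt(DetA_α · AssA_α), where DetA_α = |TP| / (|TP| + |FN| + |FP|) and AssA_α averages, over every true positive c, the ratio |TPA(c)| / (|TPA(c)| + |FNA(c)| + |FPA(c)|). The sketch below assumes these counts have already been computed for one localization threshold α (the matching step itself is omitted), and then averages HOTA_α over the thresholds.

```python
import math


def hota_alpha(num_tp: int, num_fn: int, num_fp: int,
               assoc_counts: list[tuple[int, int, int]]) -> float:
    """HOTA at one localization threshold alpha.

    assoc_counts: one (TPA, FNA, FPA) triple per true positive c.
    """
    if num_tp == 0:
        return 0.0
    det_a = num_tp / (num_tp + num_fn + num_fp)
    # Association score of each TP, averaged over all TPs.
    ass_a = sum(tpa / (tpa + fna + fpa) for tpa, fna, fpa in assoc_counts) / num_tp
    return math.sqrt(det_a * ass_a)


def hota(per_alpha: list[float]) -> float:
    """Final HOTA: mean of HOTA_alpha over alpha = 0.05, 0.10, ..., 0.95."""
    return sum(per_alpha) / len(per_alpha)


# Illustrative counts: DetA = 4/6, AssA = 0.8.
print(hota_alpha(4, 1, 1, [(8, 2, 0)] * 4))  # about 0.73
```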

These metrics collectively offer a comprehensive evaluation of multi-object tracking algorithms, considering various aspects such as detection, association, and localization accuracy. Understanding these metrics is essential for assessing and comparing the performance of different tracking systems effectively.

State of the art

In the realm of 2D object tracking, tracking by detection has emerged as a prominent approach. This methodology involves first detecting objects in each frame of a video and then associating these detections across frames. The association process relies on analyzing the location, appearance, or motion characteristics of detections to match them accurately. Tracking by detection has gained widespread adoption, primarily driven by the rapid development of reliable object detectors. Within this framework, key algorithms such as SORT, DeepSORT, and ByteTrack have made significant contributions, advancing the state of the art in 2D object tracking. Let’s explore each of these algorithms in detail to understand their principles and impact on the field.

SORT: Simple Online and Realtime Tracking [5]

SORT, standing for Simple Online and Realtime Tracking, was introduced in 2016 and quickly established itself as a standard in the field of object tracking. Developed with a focus on simplicity and efficiency, SORT’s primary objective was to create the fastest possible tracker while relying on the quality of object detector predictions. Unlike some other methods, SORT does not use appearance features of objects; it relies solely on bounding box position and size for tracking.

SORT employs two classical methods:

Kalman Filter: Responsible for motion prediction, the Kalman Filter predicts the next position of each track from its previous state using a linear constant-velocity motion model.

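Hungarian Method: Used for data association, the Hungarian method assigns new detections to the tracks’ predicted bounding boxes, using Intersection over Union (IoU) as the similarity; assignments with an IoU below a minimum threshold are rejected.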
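
For the prediction side, here is a minimal constant-velocity Kalman filter in the spirit of SORT, written with NumPy. It assumes SORT’s box parameterization [u, v, s, r] (box center, area, aspect ratio) plus velocities for u, v, and s, with the aspect ratio treated as constant. The class name and the noise covariances are illustrative assumptions rather than tuned values.

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter over a SORT-style state [u, v, s, r, du, dv, ds]."""

    def __init__(self, z: np.ndarray):
        self.x = np.zeros(7)
        self.x[:4] = z  # start at the first measurement with zero velocity
        # Large initial uncertainty on the unobserved velocities (assumed values).
        self.P = np.diag([10.0, 10.0, 10.0, 10.0, 1e4, 1e4, 1e4])
        self.F = np.eye(7)
        self.F[0, 4] = self.F[1, 5] = self.F[2, 6] = 1.0  # position += velocity
        self.H = np.eye(4, 7)      # only [u, v, s, r] is observed
        self.Q = np.eye(7) * 0.01  # process noise (assumed)
        self.R = np.eye(4) * 1.0   # measurement noise (assumed)

    def predict(self) -> np.ndarray:
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:4]

    def update(self, z: np.ndarray) -> None:
        y = z - self.H @ self.x                   # innovation
        S = self.H @ self.P @ self.H.T + self.R   # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(7) - K @ self.H) @ self.P
```

In a tracker, `predict()` would be called once per frame for every track, the predicted [u, v, s, r] converted back to a box (w = sqrt(s·r), h = s / w) for IoU matching, and `update()` called only for tracks that received a detection.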

The operation of SORT can be summarized as follows:

- An object detector provides bounding boxes for the initial frame (T=0).

- For each detected bounding box, a new track is created.

- The Kalman Filter predicts new positions for each track.

- In the subsequent frame, the object detector returns bounding boxes.

- These bounding boxes are associated with track positions predicted by the Kalman Filter.

- New tracks are created for unmatched bounding boxes.

- Unmatched tracks can be terminated if they are not matched to any detection for a specified number of frames (Tlost).

- Matched tracks and new tracks are passed to the next time step, and the process repeats.
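
The loop above can be sketched in a few dozen lines. To keep the example short and self-contained, a simple constant-velocity extrapolation of the box corners stands in for SORT’s Kalman filter; the class names, the IoU threshold of 0.3, and `max_lost` (playing the role of Tlost) are assumptions for illustration. Association uses SciPy’s assignment solver on an IoU-based cost, as described above. The original SORT also waits for a few consecutive hits before reporting a new track, which this sketch skips.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def iou(a: np.ndarray, b: np.ndarray) -> float:
    """IoU of two boxes in (x1, y1, x2, y2) format."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return float(inter / union) if union > 0 else 0.0


class Track:
    def __init__(self, track_id: int, box: np.ndarray):
        self.id = track_id
        self.box = box.astype(float)     # current (predicted or observed) box
        self.last_obs = self.box.copy()  # last matched detection
        self.velocity = np.zeros(4)      # per-frame change of each coordinate
        self.frames_since_update = 0

    def predict(self) -> np.ndarray:
        # Constant-velocity extrapolation, standing in for SORT's Kalman predict.
        self.box = self.box + self.velocity
        self.frames_since_update += 1
        return self.box

    def update(self, box: np.ndarray) -> None:
        gap = max(self.frames_since_update, 1)  # frames since the last match
        self.velocity = (box - self.last_obs) / gap
        self.last_obs = box.astype(float)
        self.box = self.last_obs.copy()
        self.frames_since_update = 0


class SortLikeTracker:
    def __init__(self, iou_threshold: float = 0.3, max_lost: int = 3):
        self.iou_threshold = iou_threshold
        self.max_lost = max_lost  # plays the role of Tlost
        self.tracks: list[Track] = []
        self.next_id = 1  # MOTChallenge IDs are 1-based

    def step(self, detections: np.ndarray) -> list[tuple[int, np.ndarray]]:
        """Process one frame of (N, 4) detections; return (id, box) of updated tracks."""
        predicted = [t.predict() for t in self.tracks]
        matched_dets = set()
        if predicted and len(detections):
            cost = np.array([[1.0 - iou(p, d) for d in detections] for p in predicted])
            for r, c in zip(*linear_sum_assignment(cost)):
                if 1.0 - cost[r, c] >= self.iou_threshold:
                    self.tracks[r].update(detections[c])
                    matched_dets.add(int(c))
        # Terminate tracks that have gone unmatched for more than max_lost frames.
        self.tracks = [t for t in self.tracks if t.frames_since_update <= self.max_lost]
        # Unmatched detections start new tracks.
        for c, det in enumerate(detections):
            if c not in matched_dets:
                self.tracks.append(Track(self.next_id, det))
                self.next_id += 1
        return [(t.id, t.box) for t in self.tracks if t.frames_since_update == 0]
```

Because an unmatched track keeps coasting along its velocity for up to `max_lost` frames, an object that is missed for a single frame can be picked up again with its original ID instead of spawning a new track.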

DeepSORT: Simple Online and Realtime Tracking with a Deep Association Metric [6]

DeepSORT extends the SORT algorithm with appearance features to enhance tracking performance. Unlike its predecessor, DeepSORT adds a small Convolutional Neural Network (CNN), trained offline on a person re-identification dataset, that extracts an appearance descriptor from each bounding box. This addition proves particularly beneficial in scenarios involving occlusions, where motion information alone may not be enough to maintain accurate tracking.

One significant advantage of DeepSORT is its ability to re-identify objects even after prolonged occlusion periods. This capability is made possible by maintaining a gallery of appearance descriptors for each track. By calculating cosine distances between new detections and stored descriptors, DeepSORT can reliably match objects, even when temporarily obscured from view.
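
The gallery lookup can be sketched with NumPy: normalize the descriptors, compute 1 - cosine similarity between each new detection and every stored descriptor of a track, and keep the smallest value. The function name and the toy 3-dimensional descriptors are assumptions for illustration; in DeepSORT the descriptors come from the CNN described above.

```python
import numpy as np


def gallery_cosine_distance(gallery: np.ndarray, detection_features: np.ndarray) -> np.ndarray:
    """Smallest cosine distance between each detection and a track's gallery.

    gallery: (K, D) appearance descriptors stored for one track.
    detection_features: (N, D) descriptors of the new detections.
    Returns an array of shape (N,).
    """
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    d = detection_features / np.linalg.norm(detection_features, axis=1, keepdims=True)
    # Cosine distance = 1 - cosine similarity; keep the best match in the gallery.
    return (1.0 - d @ g.T).min(axis=1)


gallery = np.array([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]])
dets = np.array([[2.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(gallery_cosine_distance(gallery, dets))  # first near 0, second large
```

Taking the minimum over the gallery is what lets a track re-acquire an object after occlusion: one stored view that resembles the new detection is enough.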

Central to DeepSORT’s operation is the concept of track age, the number of frames since the track was last associated with a detection. Instead of solving a single global assignment between predicted Kalman states and new measurements, DeepSORT uses a matching cascade: tracks with lower age (seen more recently) are matched first, and older tracks only compete for the detections that remain. This matters because the Kalman uncertainty of a long-unseen track keeps growing, which would otherwise make such stale tracks look deceptively compatible with new detections. The strategy helps improve tracking accuracy, especially in complex environments with frequent occlusions and clutter.
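
A sketch of the matching cascade, assuming the association cost between every track and detection has already been computed (for example, the gallery cosine distances) and that costs above a gate value are forbidden. DeepSORT also gates candidates with a Mahalanobis distance from the Kalman filter, which is left out here; the function name and parameters are illustrative.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def matching_cascade(cost: np.ndarray, track_ages: list[int], max_age: int,
                     gate: float) -> list[tuple[int, int]]:
    """DeepSORT-style cascade over a precomputed (tracks x detections) cost matrix.

    track_ages[i]: frames since track i was last matched (1 = previous frame).
    gate: pairs with a cost above this value are never matched.
    """
    unmatched_dets = list(range(cost.shape[1]))
    matches = []
    for age in range(1, max_age + 1):
        if not unmatched_dets:
            break
        tracks = [i for i, a in enumerate(track_ages) if a == age]
        if not tracks:
            continue
        # Solve one assignment per age level, using only detections still free.
        sub = cost[np.ix_(tracks, unmatched_dets)]
        rows, cols = linear_sum_assignment(sub)
        new = [(tracks[r], unmatched_dets[c]) for r, c in zip(rows, cols) if sub[r, c] <= gate]
        matches.extend(new)
        taken = {d for _, d in new}
        unmatched_dets = [d for d in unmatched_dets if d not in taken]
    return matches


# Track 0 was seen last frame, track 1 five frames ago; track 1 is slightly cheaper.
cost = np.array([[0.2], [0.1]])
print(matching_cascade(cost, track_ages=[1, 5], max_age=30, gate=0.5))  # [(0, 0)]
```

A single global assignment would give the detection to track 1 because its cost is lower; the cascade gives it to the recently seen track 0 instead.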

ByteTrack: Multi-object Tracking by Associating Every Detection Box [7]

ByteTrack is a more recent tracking algorithm that introduces a simple yet effective change to the data association step. Traditionally, many methods discard detections with low confidence scores, assuming them to be unreliable or spurious. While this helps mitigate false positives, it causes problems under occlusion or significant appearance change: partially occluded or blurred objects often receive low scores, so discarding them leads to missed objects and fragmented trajectories.

ByteTrack tackles this issue by keeping low-confidence detections and using them in the tracking process instead of discarding them. This lets the tracker recover objects that would otherwise be lost, improving tracking robustness in challenging conditions.

The algorithm operates in two distinct steps:

High-Confidence Detections: Detections with high confidence scores are first associated with all tracks using either intersection-over-union (IoU) or appearance features. High-confidence detections that remain unmatched can start new tracks.

Low-Confidence Detections: Detections with low confidence scores are then matched only against the tracks left unmatched after the first step, and only IoU is used for this association. This more conservative strategy reflects that low-confidence boxes are often occluded or blurred, which makes their appearance features unreliable; low-confidence detections that still remain unmatched are discarded as background.
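
The two association steps can be sketched as follows. Assumptions for illustration: boxes in (x1, y1, x2, y2) format, IoU as the only similarity in both steps (the first step could also use appearance features), and hypothetical thresholds of 0.5 for high confidence, 0.1 for the lowest score kept, and 0.3 for the minimum IoU.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def iou_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise IoU between (N, 4) and (M, 4) boxes."""
    x1 = np.maximum(a[:, None, 0], b[None, :, 0])
    y1 = np.maximum(a[:, None, 1], b[None, :, 1])
    x2 = np.minimum(a[:, None, 2], b[None, :, 2])
    y2 = np.minimum(a[:, None, 3], b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / np.maximum(area_a[:, None] + area_b[None, :] - inter, 1e-9)


def associate(track_boxes, det_boxes, iou_threshold):
    """IoU matching; returns (matches, unmatched_tracks, unmatched_dets)."""
    n_t, n_d = len(track_boxes), len(det_boxes)
    if n_t == 0 or n_d == 0:
        return [], list(range(n_t)), list(range(n_d))
    iou = iou_matrix(track_boxes, det_boxes)
    rows, cols = linear_sum_assignment(-iou)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if iou[r, c] >= iou_threshold]
    mt, md = {r for r, _ in matches}, {c for _, c in matches}
    return (matches, [i for i in range(n_t) if i not in mt],
            [j for j in range(n_d) if j not in md])


def byte_associate(track_boxes, det_boxes, scores,
                   high_thresh=0.5, low_thresh=0.1, iou_threshold=0.3):
    high = np.flatnonzero(scores >= high_thresh)
    low = np.flatnonzero((scores >= low_thresh) & (scores < high_thresh))
    # Step 1: every track against the high-confidence detections.
    m1, left_tracks, left_high = associate(track_boxes, det_boxes[high], iou_threshold)
    matches = [(t, int(high[d])) for t, d in m1]
    # Step 2: only leftover tracks against low-confidence detections, IoU only.
    left_idx = np.asarray(left_tracks, dtype=int)
    m2, still_left, _ = associate(track_boxes[left_idx], det_boxes[low], iou_threshold)
    matches += [(left_tracks[t], int(low[d])) for t, d in m2]
    lost_tracks = [left_tracks[t] for t in still_left]
    # Unmatched high-confidence boxes may start tracks; low-confidence leftovers are dropped.
    new_track_dets = [int(high[d]) for d in left_high]
    return matches, lost_tracks, new_track_dets
```

In a toy frame where one tracked object is partially occluded and its detection scores only 0.3, the second step still links that low-confidence box to its track instead of letting the track go lost.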

MOTChallenge

MOTChallenge stands as a crucial initiative in the field of multiple object tracking, offering a standardized platform for evaluating and benchmarking tracking algorithms. Launched with the goal of advancing the state of the art in object tracking, MOTChallenge provides researchers and practitioners with a diverse set of datasets, evaluation metrics, and competitions to assess the performance of their tracking algorithms.

The benchmark datasets offered by MOTChallenge encompass a wide range of real-world scenarios, including varying object densities, occlusions, and camera viewpoints with static or dynamic camera motion. By providing access to these diverse datasets, MOTChallenge enables comprehensive testing and validation of tracking algorithms across different environmental conditions.

Moreover, MOTChallenge defines standardized evaluation metrics, such as Multiple Object Tracking Accuracy (MOTA), Multiple Object Tracking Precision (MOTP), and Identity F1 Score (IDF1), ensuring consistency and comparability in performance assessment across different algorithms and datasets.

Experiments

In this section, I’ll outline the experiments conducted to evaluate and compare various 2D object tracking algorithms using MOTChallenge datasets and tools provided by Ultralytics YOLOv8 and the RoboFlow supervision package.

My journey began with implementing pre-trained object detection algorithms, such as YOLOv8 from Ultralytics, following this tutorial. The objective of this tutorial is to track and count vehicles passing on a highway. Through it, I learned how to obtain detection results and create trackers using state-of-the-art object tracking algorithms like ByteTrack. Additionally, I explored the tracking algorithms built into the Ultralytics YOLOv8 models, which support ByteTrack and BoT-SORT [8].

The tutorial also used the RoboFlow supervision package, a Python package with various computer vision tools such as bounding box annotation, formatting, labeling, video writing, and line counting. Both the Ultralytics package for using pre-trained YOLOv8 models and the supervision package proved user-friendly and very helpful for tasks like fine-tuning models, generating results, and annotating images and videos. The results from this tutorial can be seen in the following videos:

- Vehicle counting using YOLOv8 from Ultralytics with ByteTrack. The annotations and line counting were made with Supervision by RoboFlow.

- Vehicle tracking using YOLOv8 with built-in ByteTrack. The annotations were made with Supervision by RoboFlow.

To evaluate the performance of these algorithms, I discovered the TrackEval repository, the official evaluation code for several MOT benchmarks:

- RobMOTS

- KITTI Tracking

- KITTI MOTS

- MOTChallenge

- Open World Tracking

- PersonPath22

This repository offers several families of metrics, including:

- HOTA metrics (HOTA, DetA, AssA, LocA, DetPr, DetRe, AssPr, AssRe)

- CLEAR MOT metrics (MOTA, MOTP, MT, ML, Frag, etc.)

- Identity metrics (IDF1, IDP, IDR)

Utilizing this repository, along with insights gained from articles on MOT metrics, I learned how these metrics are employed for assessing tracking performance.

The MOTChallenge datasets, which contain ground truth annotations as well as detection outputs from object detectors, served as the foundation for my experiments. I prepared and processed the data so that it could be used with the pre-trained YOLOv8 and ByteTrack pipeline I had implemented. This involved writing scripts to convert image sequences into videos and to run inference directly on the image sequences, so that the outputs stayed compatible with the MOTChallenge datasets.
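
Each MOTChallenge sequence ships with a seqinfo.ini file describing its frames, which is a convenient starting point for such scripts. The sketch below assumes the MOT16/MOT17 layout (an `img1/` image directory with six-digit, 1-based frame names) and uses only the standard library; the function name `parse_seqinfo` is hypothetical.

```python
import configparser
from pathlib import Path


def parse_seqinfo(text: str, seq_dir: str) -> dict:
    """Read a MOTChallenge seqinfo.ini and list the frame paths in order."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    seq = cfg["Sequence"]  # option lookups are case-insensitive
    n_frames = seq.getint("seqLength")
    # Frames are numbered from 1 with six digits, e.g. img1/000001.jpg.
    frames = [Path(seq_dir) / seq["imDir"] / f"{i:06d}{seq['imExt']}"
              for i in range(1, n_frames + 1)]
    return {
        "name": seq["name"],
        "fps": seq.getfloat("frameRate"),
        "size": (seq.getint("imWidth"), seq.getint("imHeight")),
        "frames": frames,
    }
```

The resulting frame list can be fed to the detector in order, so that frame numbers line up with the ground truth, or written to a video with OpenCV’s `cv2.VideoWriter` using the returned `fps` and `size`.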

Once the groundwork was laid, I focused on writing the tracker results to a .txt file in the format required for MOTChallenge evaluation. Each line contains the following values:

frame, id, bb_left, bb_top, bb_width, bb_height, conf, x, y, z

The conf value contains the detection confidence in the det.txt files. For the ground truth, it acts as a flag indicating whether the entry is to be considered: a value of 0 means that this particular instance is ignored in the evaluation, while any other value marks it as active. For submitted results, all lines in the .txt file are considered. The world coordinates x, y, z are ignored for the 2D challenge and can be filled with -1. Similarly, the bounding boxes are ignored for the 3D challenge. However, each line must still contain 10 values. All frame numbers, target IDs, and bounding boxes are 1-based. Here is an example:

Figure 2: MOTChallenge format example
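
A small helper for producing these lines from tracker output might look like this. It assumes each tracked object arrives as (track_id, x1, y1, x2, y2, conf) with 0-based corner coordinates, as many detectors return them, so it converts the corners to width and height and, by default, shifts the top-left corner by one to follow the 1-based convention. The helper name, its input layout, and the `one_based` parameter are assumptions.

```python
def to_mot_lines(frame_idx: int, tracks, one_based: bool = True) -> list[str]:
    """Format one frame of tracker output as MOTChallenge 2D result lines.

    frame_idx: 1-based frame number.
    tracks: iterable of (track_id, x1, y1, x2, y2, conf) in pixel coordinates.
    """
    offset = 1.0 if one_based else 0.0  # shift 0-based detector coords to 1-based
    lines = []
    for track_id, x1, y1, x2, y2, conf in tracks:
        left, top = x1 + offset, y1 + offset
        width, height = x2 - x1, y2 - y1
        # x, y, z world coordinates are unused in the 2D challenge, so write -1.
        lines.append(f"{frame_idx},{track_id},{left:.2f},{top:.2f},"
                     f"{width:.2f},{height:.2f},{conf:.2f},-1,-1,-1")
    return lines


print(to_mot_lines(1, [(3, 10.0, 20.0, 60.0, 120.0, 0.9)]))
# ['1,3,11.00,21.00,50.00,100.00,0.90,-1,-1,-1']
```

Joining the lines of all frames with newlines and writing them to one .txt file per sequence yields a result file in the ten-value layout described above.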

Subsequently, I used the TrackEval script to compute tracking metrics for the results generated by the pre-trained YOLOv8 with ByteTrack. However, the initial results revealed discrepancies, notably negative MOTA and MODA (Multiple Object Detection Accuracy, which is MOTA without the ID switch term) scores, prompting further investigation into potential causes.

To understand these discrepancies, I compared the performance of our tracking algorithm with baseline results provided in the TrackEval repository, particularly those generated by MPNTrack for the MOT16 dataset. Visual analysis showed that MPNTrack was more consistent with the ground truth annotations and therefore achieved better MOT metrics. MOTA and MODA become negative when the total number of errors exceeds the number of ground truth objects; in our case, this was driven by a large number of false negatives. The main cause was that our tracker’s boxes disappeared while pedestrians were occluded, whereas the ground truth keeps annotating pedestrians even when they are occluded. For comparison, we present the ground truth and the results for the MOT16-04 video:

- Original MOT16-04 video

- MOT16-04 with pedestrians ground truth annotations

- MOT16-04 with MPNTrack tracker results provided by TrackEval repository

- MOT16-04 with YOLOv8 with ByteTrack tracker results

In conclusion, these experiments have yielded valuable insights into the effectiveness of various tracking algorithms and how MOT metrics operate and can be used. These insights will be invaluable as we delve deeper into the core investigation of this Ph.D.: developing end-to-end perception algorithms. The source code of the experiments conducted for this task is available on GitHub.

nuImages

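Unfortunately, the nuImages dataset does not provide the frame-to-frame identity annotations needed for 2D tracking: its 2D annotations cover individual keyframes only, while the tracking annotations of the related nuScenes dataset are 3D boxes annotated with lidar point clouds. As a result, it is unsuitable for evaluating our 2D object tracking algorithms. Consequently, my attention has been directed solely towards the datasets provided by MOTChallenge, as outlined above.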

What’s next?

In the next phase, our focus will shift towards 3D object detection. Initially, we’ll explore 3D object detection in images, incorporating 3D bounding boxes, which introduces additional complexity due to orientation considerations. Following this, we will delve into 3D object detection utilizing point clouds, further advancing our understanding and capabilities in this domain.

References

[1] A. Milan, L. Leal-Taixe, I. Reid, S. Roth, and K. Schindler, “MOT16: A Benchmark for Multi-Object Tracking,” pp. 1–12, Mar. 2016, [Online]. Available: https://arxiv.org/abs/1603.00831

[2] K. Bernardin and R. Stiefelhagen, “Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics,” EURASIP J. Image Video Process., vol. 2008, pp. 1–10, 2008, doi: 10.1155/2008/246309.

[3] E. Ristani, F. Solera, R. Zou, R. Cucchiara, and C. Tomasi, “Performance Measures and a Data Set for Multi-target, Multi-camera Tracking,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 9914 LNCS, 2016, pp. 17–35. doi: 10.1007/978-3-319-48881-3_2.

[4] J. Luiten et al., “HOTA: A Higher Order Metric for Evaluating Multi-Object Tracking,” Int. J. Comput. Vis., vol. 129, no. 2, pp. 548–578, Sep. 2020, doi: 10.1007/s11263-020-01375-2.

[5] A. Bewley, Z. Ge, L. Ott, F. Ramos, and B. Upcroft, “Simple online and realtime tracking,” in 2016 IEEE International Conference on Image Processing (ICIP), Sep. 2016, vol. 2016-August, pp. 3464–3468. doi: 10.1109/ICIP.2016.7533003.

[6] N. Wojke, A. Bewley, and D. Paulus, “Simple online and realtime tracking with a deep association metric,” in 2017 IEEE International Conference on Image Processing (ICIP), Sep. 2017, vol. 2017-September, pp. 3645–3649. doi: 10.1109/ICIP.2017.8296962.

[7] Y. Zhang et al., “ByteTrack: Multi-object Tracking by Associating Every Detection Box,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 13682 LNCS, 2022, pp. 1–21. doi: 10.1007/978-3-031-20047-2_1.

[8] N. Aharon, R. Orfaig, and B. Bobrovsky, “BoT-SORT: Robust Associations Multi-Pedestrian Tracking,” 2022, [Online]. Available: https://arxiv.org/abs/2206.14651